Getting to the Point: The Benefits of Extractive Summarization

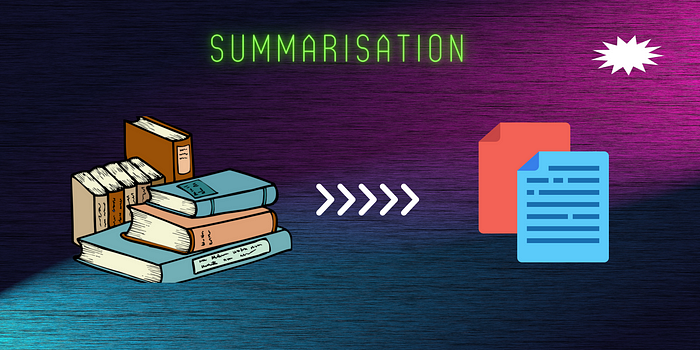

In Natural Language Processing (NLP), summarization plays a crucial role in reducing the length of long documents while preserving the most essential information. There are two main approaches to summarization:

- Abstractive summarization.

- Extractive summarization.

This article explores the extractive summarization method and discusses its strengths and limitations. By the end, readers should understand how the extractive approach works and how it can be applied in real-world scenarios.

Extractive summarization

Extractive summarization is a method of summarizing a text document by selecting key sentences or phrases from the original text and concatenating them to form a summary. It does not create new phrases or sentences but instead selects the most significant content from the original text. This method is also known as “surface-level summarization” or “selection-based summarization”. It is commonly applied to news articles, legal documents, and scientific papers. Extractive summarization lets a reader quickly grasp a large document’s main ideas and key points without reading the entire text, which can save time and improve efficiency in legal, medical, and business settings.

Approach to extractive summary

We will perform extractive summarization of text using GloVe, a pre-trained word embedding model. Our approach can be divided into these broad steps.

- The text is first tokenized into sentences, and then a vector representation of each sentence is obtained by summing the vectors of each word in the sentence and dividing by the number of words.

- The similarity matrix between all sentences is calculated, and the sentences are ranked based on the sum of their similarity values.

- The top k sentences are selected and added to the summary, which is returned as a string.
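As a minimal sketch of the first step, assume a hypothetical three-dimensional embedding table `toy_model` (the values are made up for illustration and are not GloVe vectors) and a hypothetical helper `sentence_vector`. A sentence vector can then be computed by averaging the vectors of its words:

```python
import numpy as np

# Hypothetical 3-dimensional embeddings, made up for illustration (not GloVe values)
toy_model = {
    "cats": np.array([1.0, 0.0, 0.0]),
    "chase": np.array([0.0, 1.0, 0.0]),
    "mice": np.array([0.0, 0.0, 1.0]),
}

def sentence_vector(sentence, model, dim=3):
    """Average the vectors of the words in `sentence` that appear in `model`."""
    vectors = [model[word] for word in sentence.split() if word in model]
    if not vectors:
        # No known words: fall back to a zero vector
        return np.zeros(dim)
    return np.mean(vectors, axis=0)

vec = sentence_vector("cats chase mice", toy_model)
print(vec)
```

Note that this sketch divides by the number of words found in the table, which is one possible design choice; dividing by the total number of words in the sentence is another.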

Before we dive deep into coding, let’s understand what word embeddings are.

Word Embeddings

Word embeddings are a type of representation for words or phrases in which words that have similar meanings are mapped to nearby points in a high-dimensional space. This representation allows words to be compared based on semantic meaning rather than just their surface form. Word embeddings are learned from large amounts of text data, usually by training neural networks or matrix factorization models.
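One common way to compare two embeddings is cosine similarity. The sketch below uses hypothetical two-dimensional vectors (made-up values, far smaller than real embeddings) to show that vectors pointing in similar directions score higher than unrelated ones:

```python
import numpy as np

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors; 1.0 means the same direction."""
    return float(np.dot(a, b) / (np.linalg.norm(a) * np.linalg.norm(b)))

# Hypothetical 2-dimensional embeddings, made up for illustration
king = np.array([0.9, 0.8])
queen = np.array([0.8, 0.9])
banana = np.array([-0.7, 0.1])

# Related words should be closer than unrelated ones
print(cosine_similarity(king, queen) > cosine_similarity(king, banana))
```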

glove.6B.100d.txt is a set of pre-trained word embeddings released by the Global Vectors for Word Representation (GloVe) project.

- The "6B" part of the name indicates that the embeddings were trained on a corpus of 6 billion tokens (i.e., individual words or punctuation marks).

- The "100d" part of the name indicates that each word is represented by a 100-dimensional vector.
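For orientation, the GloVe text files store one word per line, followed by the components of its vector separated by spaces. Here is a minimal sketch of reading such a line, using a shortened, made-up sample line and a hypothetical helper `parse_glove_line`:

```python
# A shortened, made-up line in the GloVe text layout: a word followed by
# space-separated numbers (real lines in glove.6B.100d.txt carry 100 numbers).
sample_line = "summary 0.12 -0.45 0.33 0.08\n"

def parse_glove_line(line):
    """Split one line into the word and its list of float components."""
    parts = line.split()
    word = parts[0]
    vector = [float(value) for value in parts[1:]]
    return word, vector

word, vector = parse_glove_line(sample_line)
print(word, len(vector))
```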

These pre-trained embeddings are a convenient starting point for extractive summarization. Because they were trained on a large, diverse corpus, they capture how words relate to one another. They can be used directly, fine-tuned, or fed as inputs to other models, which can lead to more accurate and concise summaries.

Delving into Details

Step 1: Importing the modules

We import three modules: numpy, os, and nltk (the Natural Language Toolkit), along with the sent_tokenize function from nltk.

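    import numpy as np
    import os
    import nltk
    # sent_tokenize needs NLTK's Punkt data, e.g. nltk.download('punkt')
    # ('punkt_tab' on newer NLTK releases)
    from nltk import sent_tokenize

Step 2: Reading the word embeddings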

You can download the GloVe word embeddings from Kaggle.

Next, we open the file ‘glove.6B.100d.txt’ with UTF-8 encoding and assign the resulting file object to the variable ‘glove_file’.

    glove_file = open('<path to your embedding>/glove.6B.100d.txt', encoding='utf8')

Step 3: Initialize the model

Then we read the embedding file line by line and build a dictionary called “model”, in which each word from the file is a key and its vector of floating-point values is the value. Each vector is created by splitting a line of the file on whitespace and converting the string values that follow the word to floating-point numbers.

    model = {}
    for line in glove_file:
        # Each line holds a word followed by its vector components
        split_line = line.split()
        word = split_line[0]
        embedding = np.array([float(val) for val in split_line[1:]])
        model[word] = embedding
    glove_file.close()

Step 4: Extractive summarization using GloVe

Next, we perform extractive summarization on a given text using GloVe. We define the function summarization, which takes a string and the desired number of sentences as parameters. It first tokenizes the text into individual sentences. Then, it finds the vector representation of each sentence by summing the vectors of the words in the sentence and dividing by the number of words in the sentence. It then calculates the similarity matrix between all sentences as the cosine similarity of each pair, computed from dot products and norms. The sentences are ranked based on the sum of their similarity values, and the top k sentences are selected and added to the summary. The summary is returned as a string.
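The similarity and ranking steps described above can also be written with vectorized NumPy operations. The following is only a sketch under stated assumptions: `rank_sentences` is a hypothetical helper, and `toy_vecs` holds made-up two-dimensional sentence vectors rather than real GloVe averages.

```python
import numpy as np

def rank_sentences(vecs):
    """Return sentence indices ordered from highest to lowest total similarity."""
    vecs = np.asarray(vecs, dtype=float)
    norms = np.linalg.norm(vecs, axis=1, keepdims=True)
    norms[norms == 0] = 1.0          # avoid dividing by zero for all-zero vectors
    unit = vecs / norms
    sim_mat = unit @ unit.T          # cosine similarity of every sentence pair
    np.fill_diagonal(sim_mat, 0.0)   # ignore each sentence's similarity to itself
    scores = sim_mat.sum(axis=1)
    return np.argsort(-scores), scores

# Hypothetical sentence vectors, made up for illustration
toy_vecs = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0]]
order, scores = rank_sentences(toy_vecs)
print(order)
```

The middle vector ranks first here because it is the one most similar to the other two, which is the intuition behind ranking by summed similarity.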

    # Extractive summarization using GloVe
    def summarization(text, k):
        # Tokenization
        sentences = sent_tokenize(text)

        # Find the vector representation of each sentence
        sentence_vectors = []
        for sentence in sentences:
            words = sentence.split()
            known_vectors = [model[word] for word in words if word in model]
            if known_vectors:
                # Sum the known word vectors and divide by the number of words
                vector = sum(known_vectors) / (len(words) + 0.001)
            else:
                # No word of the sentence is in the vocabulary
                vector = np.zeros(100)
            sentence_vectors.append(vector)

        # Calculate the similarity matrix (cosine similarity of each sentence pair)
        sim_mat = np.zeros([len(sentences), len(sentences)])
        for i in range(len(sentences)):
            for j in range(len(sentences)):
                if i != j:
                    norm_product = np.linalg.norm(sentence_vectors[i]) * np.linalg.norm(sentence_vectors[j])
                    # Skip pairs that include a zero vector to avoid dividing by zero
                    if norm_product > 0:
                        sim_mat[i][j] = np.dot(sentence_vectors[i], sentence_vectors[j]) / norm_product

        # Create a ranking of sentences in descending order
        ranking = np.zeros(len(sentences))
        for i in range(len(sentences)):
            ranking[i] = np.sum(sim_mat[i])
        sorted_rankings = np.argsort(-1 * ranking)

        # Select the top K sentences and restore their original order
        top_k_sentences = sorted_rankings[:k]
        top_k_sentences.sort()
        summary = " ".join(sentences[index] for index in top_k_sentences)
        return summary

Step 5: Read the text file

Finally, we define a sample text, summarize it with the summarization function using k = 3, and print the resulting summary.

    # Read the text file
    text = """Dougie Freedman is on the verge of agreeing a new two-year deal to remain at Nottingham Forest. Freedman has stabilised Forest since he replaced cult hero Stuart Pearce and the club's owners are pleased with the job he has done at the City Ground. Dougie Freedman is set to sign a new deal at Nottingham Forest . Freedman has impressed at the City Ground since replacing Stuart Pearce in February . They made an audacious attempt on the play-off places when Freedman replaced Pearce but have tailed off in recent weeks. That has not prevented Forest's ownership making moves to secure Freedman on a contract for the next two seasons."""

    # Print the summary for the text
    k = 3
    summary = summarization(text, k)
    print(summary)

Final Output

Freedman has stabilised Forest since he replaced cult hero Stuart Pearce and the club's owners are pleased with the job he has done at the City Ground.

Dougie Freedman is set to sign a new deal at Nottingham Forest.

That has not prevented Forest's ownership making moves to secure Freedman on a contract for the next two seasons.

It sounds wonderful, but like everything else in tech, it has its flaws.

Limitations

The limitations of extractive summarization include the following:

- Inflexibility: Extractive summarization only selects and rearranges existing sentences and cannot generate new information.

- Lack of coherence: The selected sentences may not always make sense as a coherent summary and may require additional editing to improve readability.

- Bias: The summarization may be biased towards certain viewpoints, opinions, or sources that are over-represented in the original text.

- Inability to capture context: Extractive summarization may not be able to fully capture the context of the original text, leading to a loss of meaning.

To wrap up, this article discussed the extractive summarization method in NLP, introduced GloVe, a pre-trained word embedding model, walked through a step-by-step process for performing extractive summarization with it, and outlined the main limitations of the approach. Readers should now have a working understanding of extractive summarization and how it can be applied in real-world scenarios.

In the next article, I will discuss abstractive summarization, compare the two approaches, and look at how the abstractive approach can address the limitations above. Stay tuned!

Thank you for taking the time to read this article. Balancing grad school and writing can be a challenge, but I felt inspired to share my knowledge after taking a Natural Language Processing course last semester. For my final project, I chose to focus on summarization, and I hope that by writing about it, I can help others clarify their understanding of this topic.
